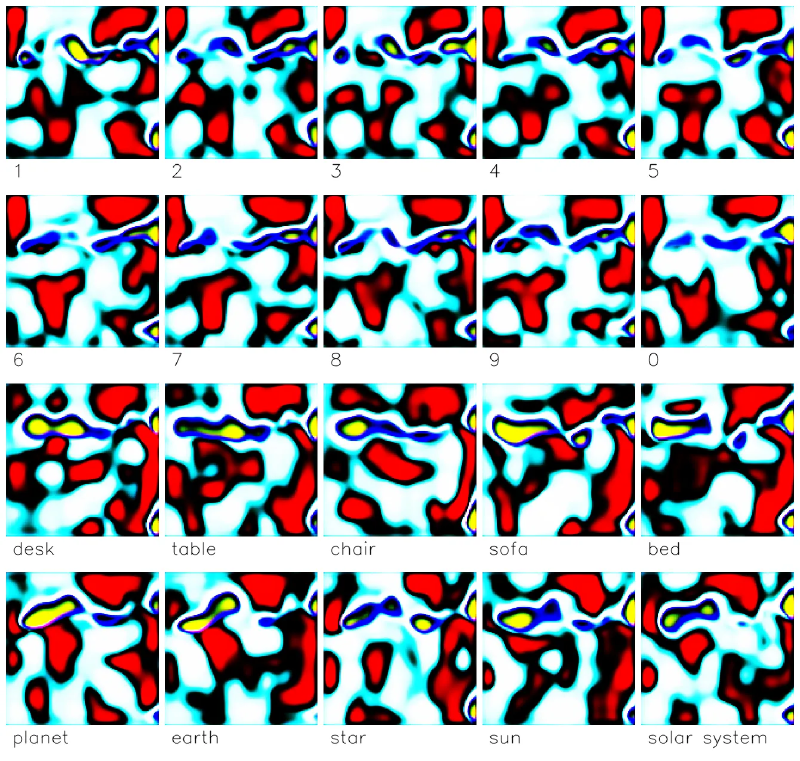

Research project exploring the possibility of creating a continuous, visual language that hint at the possibility of direct understanding of the embedding spaces produced by ML models.

Unlike traditional discrete languages, this system uses machine learning to generate unique visual representations (images) for any given text embedding. The goal is to create a language where concepts can blend into each other continuously, mirroring how neural networks process information.

Words and sentences are represented as points in a continuous vector space, allowing for smooth transitions between concepts. Experiments show that humans can learn to interpret these generated visual embeddings with increasing accuracy over time. The visual language is designed to be perfectly reconstructible back into the original text embeddings by a decoder network crating a bridge between human cognition and the “black box” mechanized interpretation of the data.

Status: Completed Experiment