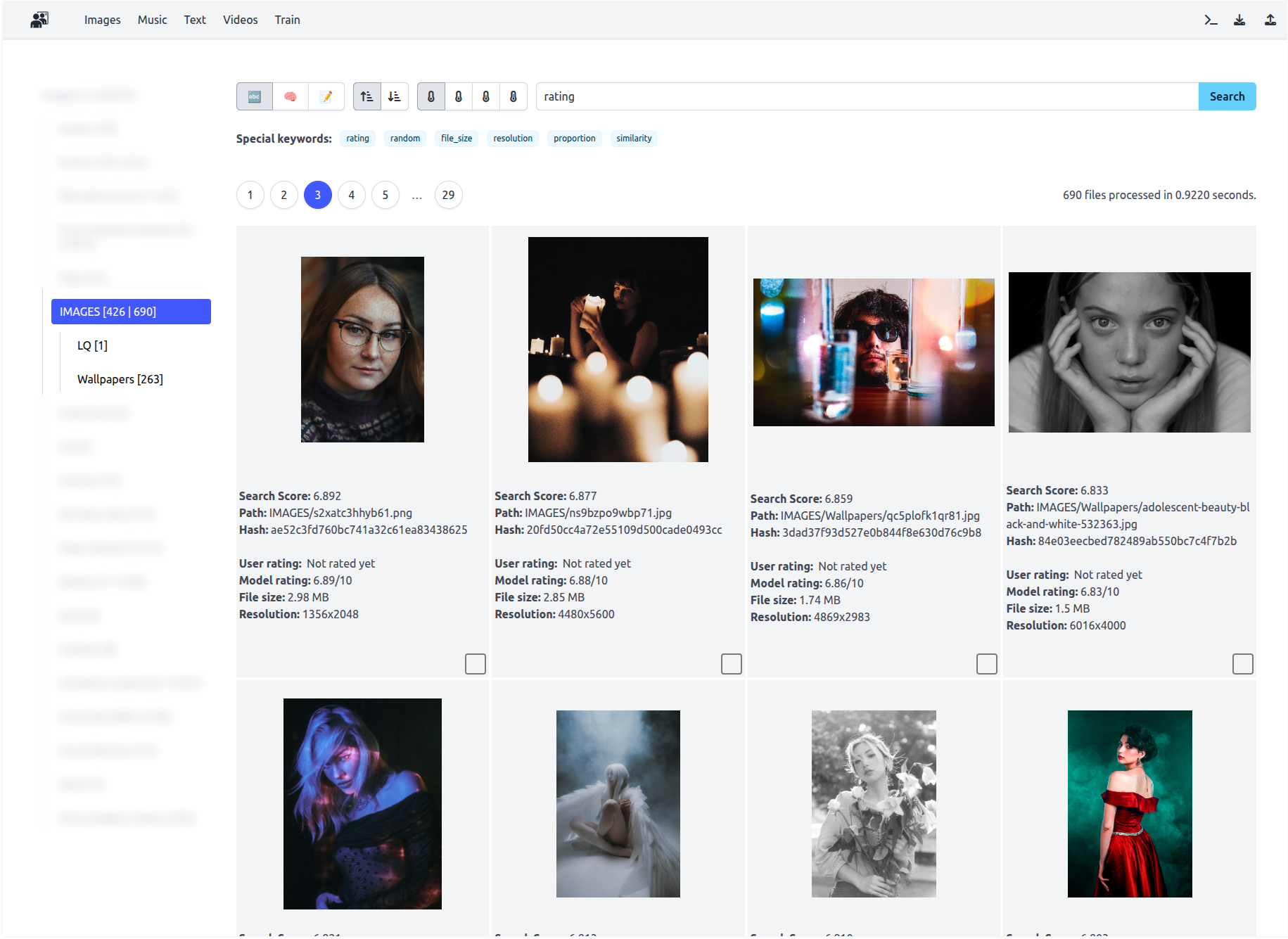

Completely local data-management platform with built in trainable recommendation engine.

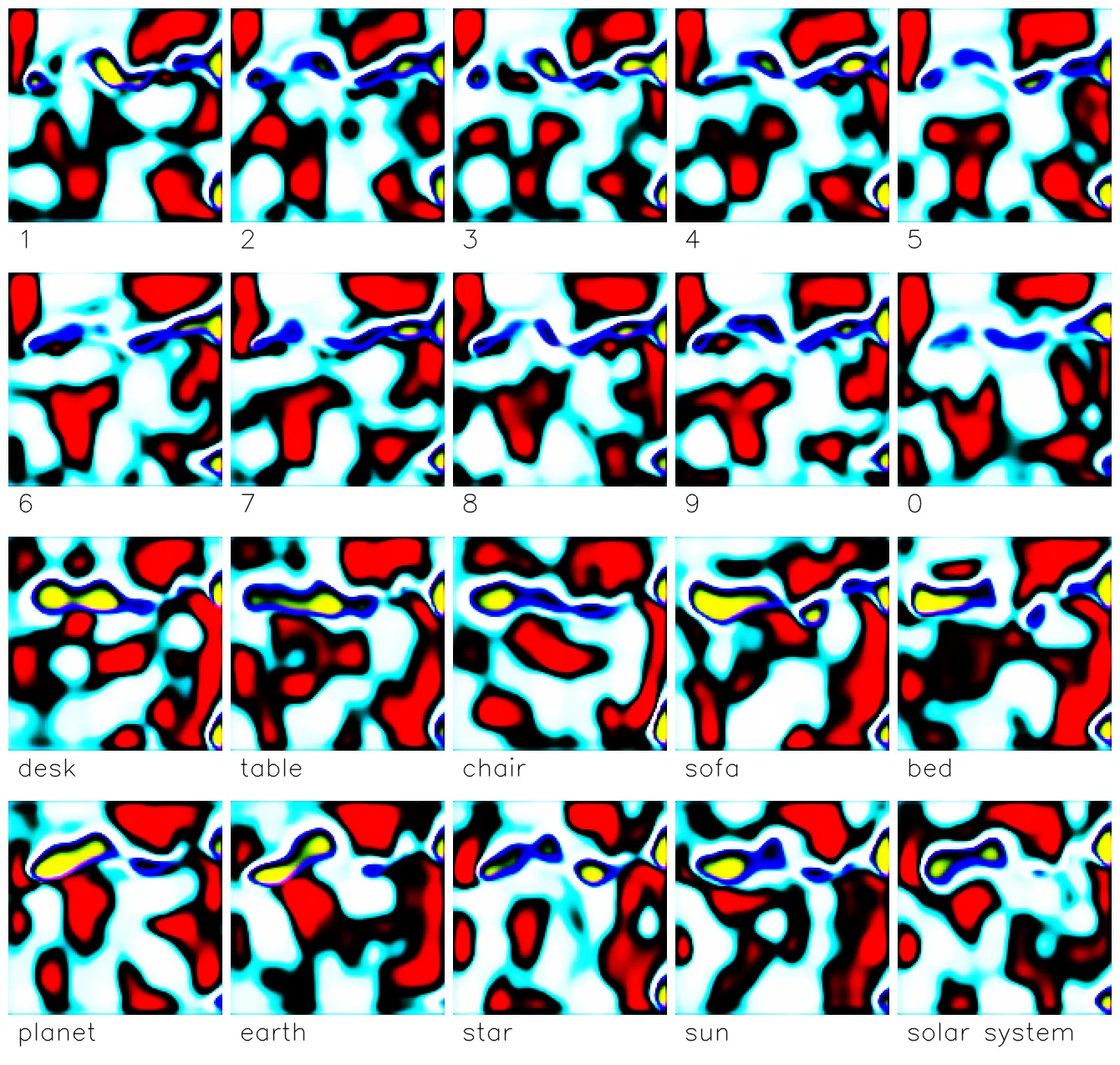

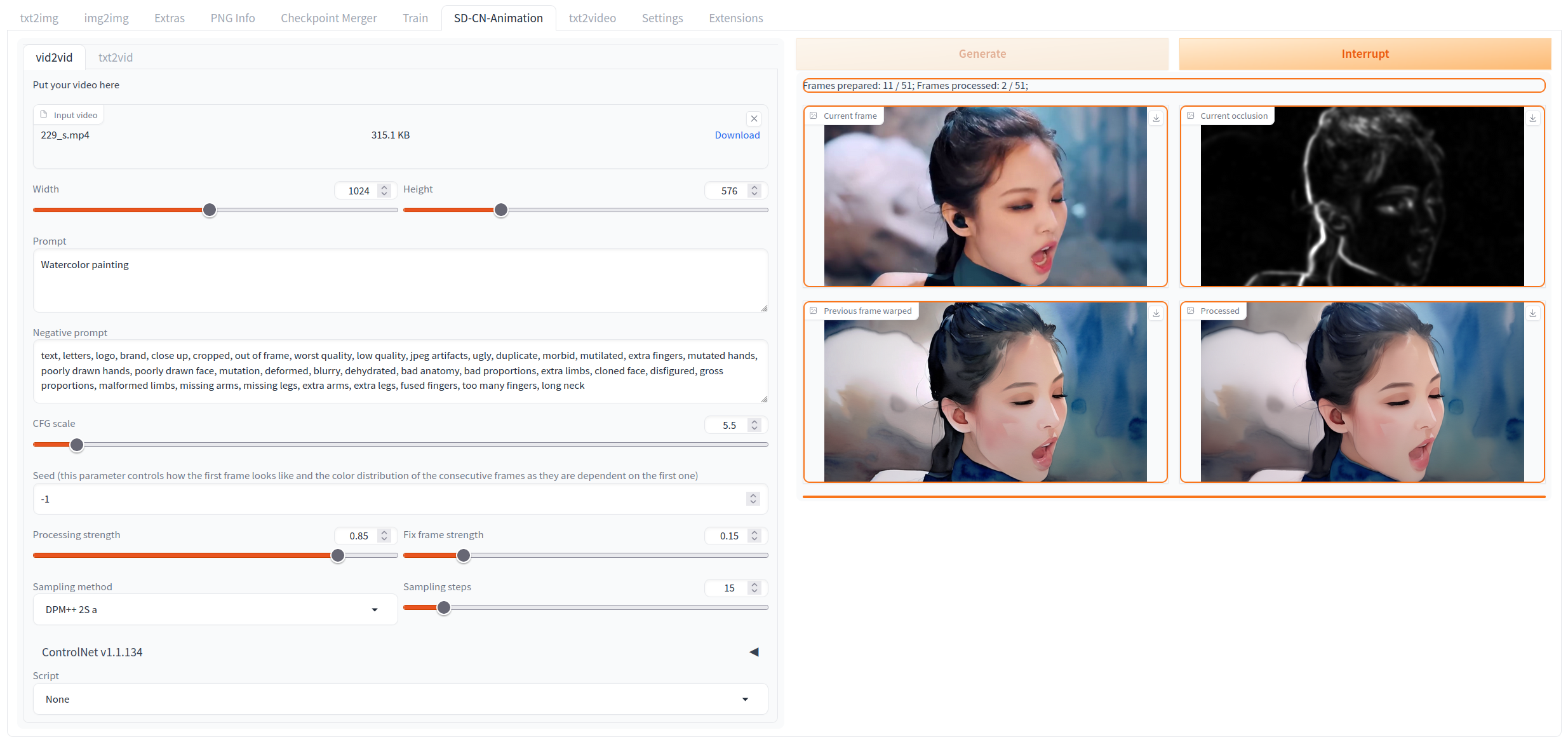

The core idea is to create a self-hosted local-first media platform where you can rate your data, and the system trains a personal model to understand your preferences. This model then sorts your data based on your predicted interest, creating a personalized filter for any type of media you might have — images, music, videos, articles, and more.

- Local First: All data always stays on your device only. All models are trained and inferenced locally.

- AI Powered: Uses advanced embeddings (CLAP, SigLIP, Jina) to understand, search and filter your content and estimate preferences.

- FullStack: Built with Flask, Bulma, Transformers, and PyTorch. Uses simple Docker setup for easy deployment.

- Open Source: AGPL-3.0 license. Contributions, feedback and support are always welcome!

Status: Active Development.