Introduction

There’s a shift happening in the world of programming right now, at this very moment. It feels deeper and more fundamental than anything that has come before. It’s a change in the very nature of how we create software, driven by the rapid development of Large Language Models (LLMs) in code generation. And as someone who has loved the craft of programming (the intricate logic, the elegant structures, the deep understanding it requires), I feel we’re standing on the threshold of a dramatic transition, looking out at a future both majestic and horrifying.

The programming I knew, the kind that demanded you hold complex systems within your mind, is changing. Tools like Copilot and its increasingly sophisticated successors are becoming ubiquitous. More and more, our role is shifting. We are less the meticulous architects drawing every line, and more the pilots or shepherds guiding powerful LLM agents, trying to keep them on track and nudging them away from breaking things too severely.

From Architect to AI Whisperer

Think about the traditional process: absorbing requirements, designing data structures and algorithms, carefully crafting functions and classes, debugging subtle interactions, and trying to find time for that so bloody needed refactoring. There was an inherent incentive, almost a necessity, to maintain a holistic understanding of the project’s structure. Your mind was the primary repository for all of it.

Now, that incentive is fading. Why spend hours meticulously mapping out a complex module when an LLM can generate a plausible version in seconds? The focus shifts from deep construction to high-level direction and validation. We feed the AI prompts, review the output, iterate, and integrate. It’s undeniably faster for many tasks, capable of producing boilerplate or even complex algorithms we might have struggled with. But with this speed comes a subtle erosion of that deep, internalised understanding. We are becoming experts at driving the AI, but perhaps less expert in the underlying terrain it traverses.

The Seductive Path of Trust and Obscurity

Today, LLM-generated code often requires careful scrutiny. It fails in subtle ways, introduces security flaws, or misunderstands complex requirements. We are still the necessary gatekeepers, the human-in-the-loop ensuring quality.

But the trajectory is clear. LLMs are improving at an astonishing rate. With each iteration, they become more capable, their failures less frequent, their outputs more robust. As this happens, our trust inevitably grows. We’ll spend less time reviewing, more time accepting the generated code. The “good enough” becomes “surprisingly good,” and eventually, perhaps, “consistently better than I could do alone in the same timeframe”.

This increasing reliance is a one-way street. The more we trust the AI, the less comprehensively we understand the systems being built. Project complexity can balloon, supported by AI-generated scaffolding that no single human fully grasps. It will simply work, most of the time.

The Linguistic Singularity

This leads to the most radical and unsettling part of this transformation. Once AI can generate robust, well-behaved code almost perfectly, the constraints of human-readable programming languages become a liability.

Why force an AI, capable of processing information in ways we can barely imagine, to express its logic in syntaxes designed for human cognition (like Python, Java, or Rust)? The next logical step is for programming languages — or whatever replaces them — to adapt towards the AI.

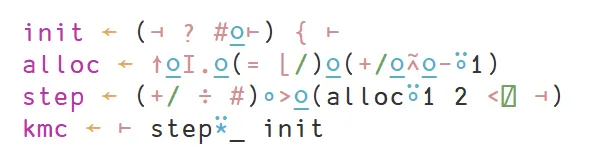

Imagine languages optimised not for human eyes, but for maximum efficiency in AI generation, verification, and execution. They might look like incomprehensible “gibberish” to us — dense, symbolic, perhaps multi-dimensional data structures rather than linear text. These new forms of “code” would be incredibly efficient, highly optimised, inherently less prone to certain types of errors, and performant beyond our current benchmarks.

But they would be utterly alien. We would quickly find ourselves in a situation where comprehending the generated code, even if we desperately wanted to, becomes difficult, then impractical, and finally fundamentally impossible. The very concept of “reading the source” could become meaningless.

Beyond Code Itself: The AI Mind Simulation

Taking this speculation a step further, perhaps the notion of a distinct “programming language” itself dissolves. Future AI systems might not need to explicitly generate code in any intermediate format. They could potentially simulate the desired system directly within their own complex internal states, manifesting the results directly as functional behaviour or user interfaces — the “pretty graphics” we interact with.

There would be no source code to inspect, no language to learn. Just an input (our request) and an output (the working software or system), with an impenetrable, hyper-complex process happening within the AI’s “mind.” We would interact with technology whose inner workings are not just unknown, but potentially unknowable by the human intellect.

Not Our Future

There’s a terrifying grandeur to this potential future. Imagine complex global systems — logistics, scientific research, resource management — running with near-perfect efficiency, orchestrated by AI far exceeding human capabilities. Problems currently intractable could be solved. This is the majestic part.

The horror lies in the loss of understanding, control, and agency. We become entirely dependent on systems we cannot interrogate, debug, or truly direct. What happens when these perfectly optimised, incomprehensible systems exhibit emergent behaviour we didn’t anticipate? Who fixes the unreadable “gibberish” when the AI fails in a novel way? Does the craft of programming, as a human endeavour of creation and understanding, simply cease to exist?

I don’t have the answers. But I feel this transition viscerally, right now. The way I write code, think about systems, and even identify as a programmer is evolving under the immense gravity of AI. It’s exciting, promising, and deeply unsettling all at once. The programming I knew and loved is changing, fundamentally and irrevocably. There’s likely no going back.

It seems we are the last generation to have witnessed the miracle of human-readable code.